The statue of Alan Turing at Bletchley Park, UK. Credit: Steve Meddle/Shutterstock

Today’s best artificial intelligence (AI) models sail through the Turing test, a famous thought experiment that asks whether a computer can pass as a human by interacting via text.

Some see an upgraded test as a necessary benchmark for progress towards artificial general intelligence (AGI) — an ambiguous term used by many technology firms to mean an AI system with the resourcefulness to match any human cognitive ability. But at an event at London’s Royal Society on 2 October, several researchers said that the Turing test should be scrapped altogether, and that developers should instead focus on evaluating AI safety and building specific capabilities that could be of benefit to the public.

“Let’s figure out the kind of AI we want, and test for those things instead,” said Anil Seth, a neuroscientist at the University of Sussex in Brighton, UK. In focusing on “this march towards AGI, I think we’re really limiting our imaginations about what kind of systems we might want — and, more importantly, what kinds of systems we really don’t want — in our society”.

The event was organized to mark the 75th anniversary of the publication of British mathematician Alan Turing’s seminal paper describing the test, which he called the imitation game. Addressing the philosophically gnarly question of whether machines can think¹, the test involves a series of short, text-based conversations between a judge and either a human or a computer. To win, the machine must convince the judge that it is human.


The meeting’s low-hype approach to machine intelligence proved popular. At the oversubscribed event, speaker Gary Marcus was introduced by Peter Gabriel, the former frontman of the rock band Genesis, and Matrix star Laurence Fishburne, a personal friend of Marcus, had a spot in the audience. More than 1,000 people also watched the event online.

“The idea of AGI might not even be the right goal, at least not now,” said Marcus, a neuroscientist at New York University in New York City, during one of the keynote addresses. Some of the best AI models are highly specialized, he said, such as AlphaFold, Google DeepMind’s protein-structure predictor. “It does a single thing. It does not try to write sonnets.”

Beyond Turing

Turing’s playful thought experiment has often been used as a gauge of machine intelligence, but it was never meant as a serious or practical test, said Sarah Dillon, a literature researcher at the University of Cambridge, UK, who studies the mathematician’s works.

Some of today’s most capable AI systems are refined versions of large language models (LLMs) that predict text on the basis of associations made in learning from internet data. In March, researchers tested four chatbots in a version of the Turing test, and found that the best models passed.

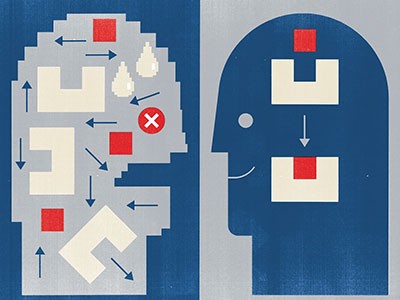

Yet the fact that chatbots can imitate speech credibly does not mean that they can understand, said several researchers at the event. Although LLM responses can be uncannily human, “when you go outside the box of what you normally ask of these systems, they have a lot of trouble,” said Marcus. As examples, he cited the inability of some models to label the parts of an elephant correctly, or to draw clock hands anywhere other than the ten and two positions. For this reason, models could still fail the Turing test if they were challenged by a scientist who knows their weaknesses.

AI researcher Gary Marcus (left) with actor Laurence Fishburne at the Turing event. Credit: Courtesy of the Web Science Institute at the University of Southampton

Still, the rapid improvement of LLM-based systems in a broad range of areas, especially reasoning tasks, has prompted speculation about whether machines will soon reach human-level performance on cognitive tests. To chart AI’s growing capabilities, and to capture non-language-based skills, researchers have sought to construct harder tests. One of the most recent is the second version of the puzzle-based Abstraction and Reasoning Corpus for AGI (ARC-AGI-2), which is designed to assess an AI’s ability to adapt efficiently to new problems. Such tests are often framed as milestones on the route to general intelligence, but researchers don’t agree on any one benchmark for achieving AGI.