Nature, Published online: 19 June 2024; doi:10.1038/d41586-024-01641-0

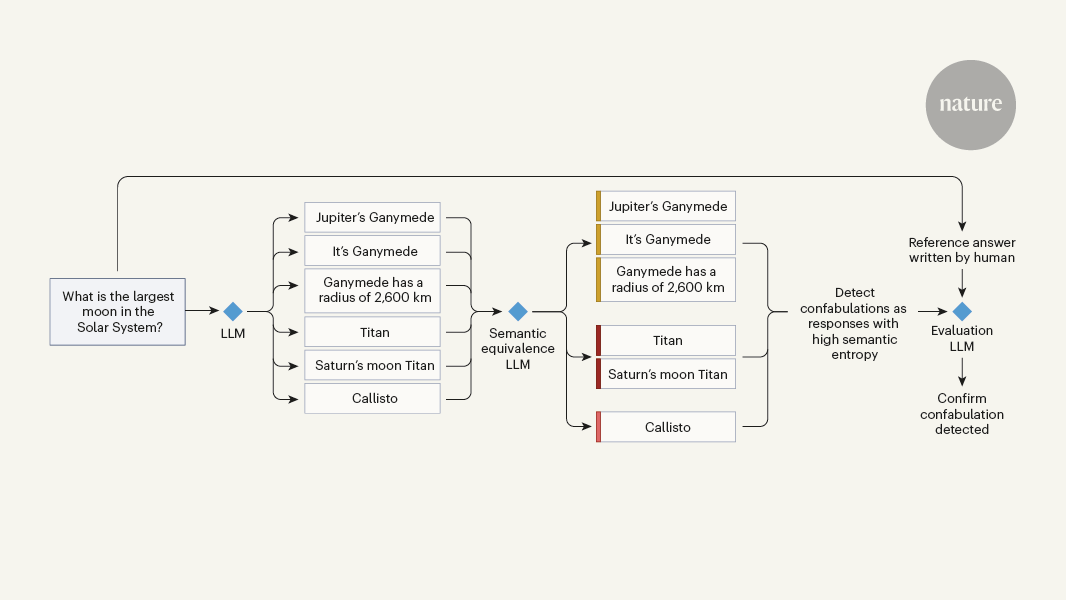

The number of errors produced by a large language model (LLM) can be reduced by grouping its outputs into semantically similar clusters. Remarkably, this task can be performed by a second LLM, and the method’s efficacy can be evaluated by a third.
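As a rough illustration of the clustering step, the sketch below groups several sampled answers to one question by meaning and reports how large the biggest group is. It is a toy under stated assumptions, not the published method: `cluster_by_meaning`, `agreement_share`, and `toy_same_meaning` are hypothetical names, the word-overlap check merely stands in for the second LLM that would judge whether two answers mean the same thing, and treating a low share as a warning sign is one plausible way to use the clusters.

```python
import re
from typing import Callable, List


def cluster_by_meaning(
    answers: List[str],
    same_meaning: Callable[[str, str], bool],
) -> List[List[str]]:
    """Greedily group answers; each answer joins the first cluster whose
    representative (first member) is judged to share its meaning."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            if same_meaning(cluster[0], answer):
                cluster.append(answer)
                break
        else:
            # No existing cluster matched, so start a new one.
            clusters.append([answer])
    return clusters


def agreement_share(clusters: List[List[str]]) -> float:
    """Fraction of all answers that fall in the largest cluster."""
    total = sum(len(c) for c in clusters)
    return max(len(c) for c in clusters) / total


def toy_same_meaning(a: str, b: str) -> bool:
    """Hypothetical stand-in for the second LLM (assumption): two answers
    'match' if the word set of one is contained in the other's."""
    words_a = set(re.findall(r"[a-z0-9]+", a.lower()))
    words_b = set(re.findall(r"[a-z0-9]+", b.lower()))
    return words_a <= words_b or words_b <= words_a


# Four sampled answers to the same (hypothetical) question.
samples = ["Paris", "It is Paris.", "paris", "Lyon"]
clusters = cluster_by_meaning(samples, toy_same_meaning)
print(clusters)
print(agreement_share(clusters))
```

In this toy run, three of the four answers land in one cluster, so the share is 0.75. A real system would replace `toy_same_meaning` with a call to a second model, and answers whose samples scatter across many small clusters could be flagged or withheld rather than reported.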
