Study participants

All procedures and studies were carried out in accordance with the Massachusetts General Hospital Institutional Review Board and in strict adherence to Harvard Medical School guidelines. All participants included in the study were scheduled to undergo planned awake intraoperative neurophysiology and single-neuronal recordings for deep brain stimulation targeting. Consideration for surgery was made by a multidisciplinary team including neurologists, neurosurgeons and neuropsychologists18,19,55,56,57. The decision to carry out surgery was made independently of study candidacy or enrolment. Further, all microelectrode entry points and placements were based purely on planned clinical targeting and were made independently of any study consideration.

Only after a patient had consented to and been scheduled for surgery was their candidacy for participation in the study reviewed with respect to the following inclusion criteria: 18 years of age or older, right-hand dominant, capacity to provide informed consent for study participation and demonstration of English fluency. To evaluate language comprehension and the capacity to participate in the study, the participants were given randomly sampled sentences and were then asked questions about them (for example, “Eva placed a secret message in a bottle” followed by “What was placed in the bottle?”). Participants who were unable to answer all questions on testing were excluded from consideration. All participants gave informed consent to participate in the study and were free to withdraw at any point without consequence to clinical care. A total of 13 participants were enrolled (Extended Data Table 1). No participant blinding or randomization was used.

Neuronal recordings

Acute intraoperative single-neuronal recordings

Microelectrode recordings were performed in participants undergoing planned deep brain stimulator placement19,58. During standard intraoperative recordings before deep brain stimulator placement, microelectrode arrays are used to record neuronal activity. Before clinical recordings and deep brain stimulator placement, recordings were transiently made from the cortical ribbon at the planned clinical placement site. These recordings were largely centred along the superior posterior middle frontal gyrus within the dorsal prefrontal cortex of the language-dominant hemisphere. Here each participant’s computed tomography scan was co-registered to their magnetic resonance imaging scan, and a segmentation and normalization procedure was carried out to bring native brains into Montreal Neurological Institute space. Recording locations were then confirmed using SPM12 software and were visualized on a standard three-dimensional rendered brain (spm152). The Montreal Neurological Institute coordinates for recordings are provided in Extended Data Table 1, top.

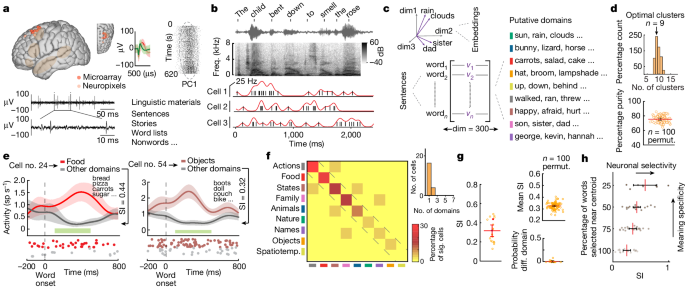

We used two main approaches to perform single-neuronal recordings from the cortex18,19. Altogether, ten participants underwent recordings using tungsten microarrays (Neuroprobe, Alpha Omega Engineering) and three underwent recordings using linear silicon microelectrode arrays (Neuropixels, IMEC). For the tungsten microarray recordings, we incorporated a Food and Drug Administration-approved, biodegradable, fibrin sealant that was first placed temporarily between the cortical surface and the inner table of the skull (Tisseel, Baxter). Next, we incrementally advanced an array of up to five tungsten microelectrodes (500–1,500 kΩ; Alpha Omega Engineering) into the cortical ribbon at 10–100 µm increments to identify and isolate individual units. Once putative units were identified, the microelectrodes were held in position for a few minutes to confirm signal stability (we did not screen putative neurons for task responsiveness). Here neuronal signals were recorded using a Neuro Omega system (Alpha Omega Engineering) that sampled the neuronal data at 44 kHz. Neuronal signals were amplified, band-pass-filtered (between 300 Hz and 6 kHz) and stored off-line. Most individuals underwent two recording sessions. After neural recordings from the cortex were completed, subcortical neuronal recordings and deep brain stimulator placement proceeded as planned.

For the silicon microelectrode recordings, sterile Neuropixels probes31 (version 1.0-S, IMEC, ethylene oxide sterilized by BioSeal) were advanced into the cortical ribbon with a manipulator connected to a ROSA ONE Brain (Zimmer Biomet) robotic arm. The probes (width: 70 µm, length: 10 mm, thickness: 100 µm) consisted of 960 contact sites (384 preselected recording channels) that were laid out in a chequerboard pattern. A 3B2 IMEC headstage was connected via a multiplexed cable to a PXIe acquisition module card (IMEC), installed into a PXIe chassis (PXIe-1071 chassis, National Instruments). Neuropixels recordings were performed using OpenEphys (versions 0.5.3.1 and 0.6.0; https://open-ephys.org/) on a computer connected to the PXIe acquisition module recording the action potential band (band-pass-filtered from 0.3 to 10 kHz, sampled at 30 kHz) as well as the local field potential band (band-pass-filtered from 0.5 to 500 Hz, sampled at 2,500 Hz). Once putative units were identified, the Neuropixels probe was held in position briefly to confirm signal stability (we did not screen putative neurons for speech responsiveness). Additional description of this recording approach can be found in refs. 20,30,31. After completing single-neuronal recordings from the cortical ribbon, the Neuropixels probe was removed, and subcortical neuronal recordings and deep brain stimulator placement proceeded as planned.

Single-unit isolation

For the tungsten microarray recordings, putative units were identified and sorted off-line through a Plexon workstation. To allow for consistency across recording techniques (that is, with the Neuropixels recordings), a semi-automated valley-seeking approach was used to classify the action potential activities of putative neurons and only well-isolated single units were used. Here, the action potentials were sorted to allow for comparable isolation distances across recording techniques59,60,61,62,63 and unit selection with previous approaches27,28,29,64,65, and to limit the inclusion of multi-unit activity (MUA). Candidate clusters of putative neurons needed to clearly separate from channel noise, display a voltage waveform consistent with that of a cortical neuron, and have 99% or more of action potentials separated by an inter-spike interval of at least 1 ms (Extended Data Fig. 1b,d). Units with clear instability were removed and any extended periods (for example, greater than 20 sentences) of little to no spiking activity were excluded from the analysis. In total, 18 recording sessions were carried out, for an average of 5.4 units per session per multielectrode array (Extended Data Fig. 1a,b).

For the Neuropixels recordings, putative units were identified and sorted off-line using Kilosort and only well-isolated single units were used. We used Decentralized Registration of Electrophysiology Data (DREDge; https://github.com/evarol/DREDge) software and an interpolation approach (https://github.com/williamunoz/InterpolationAfterDREDge) to motion correct the signal using an automated protocol that tracked local field potential voltages using a decentralized correlation technique that realigned the recording channels in relation to brain movements31,66. Following this, we interpolated the continuous voltage data from the action potential band using the DREDge motion estimate to allow the activities of the recorded units to be stably tracked over time. Finally, putative neurons were identified from the motion-corrected interpolated signal using a semi-automated Kilosort spike sorting approach (version 1.0; https://github.com/cortex-lab/KiloSort) followed by Phy for cluster curation (version 2.0a1; https://github.com/cortex-lab/phy). Here, an n-trode approach was used to optimize the isolation of single units and limit the inclusion of MUA67,68. Units with clear instability were removed and any extended periods (for example, greater than 20 sentences) of little to no spiking activity were excluded from analysis. In total, 3 recording sessions were carried out, for an average of 51.3 units per session per multielectrode array (Extended Data Fig. 1c,d).

Multi-unit isolation

To provide comparison to the single-neuronal data, we also separately analysed MUA. These MUAs reflect the combined activities of multiple putative neurons recorded from the same electrodes as represented by their distinct waveforms57,69,70. These MUAs were obtained by separating all recorded spikes from their baseline noise. Unlike for the single units, the spikes were not separated on the basis of their waveform morphologies.

Audio presentation and recordings

The linguistic materials were given to the participants in audio format using a Python script utilizing the PyAudio library (version 0.2.11). Audio signals were sampled at 22 kHz using two microphones (Shure, PG48) that were integrated into the Alpha Omega rig for high-fidelity temporal alignment with neuronal data. Audio recordings were annotated in a semi-automated fashion (Audacity; version 2.3). For the Neuropixels recordings, audio recordings were carried out at a 44 kHz sampling frequency (TASCAM DR-40X 4-channel 4-track portable audio recorder and USB interface with adjustable microphone). To further ensure granular time alignment for each word token with neuronal activity, the amplitude waveform of each session recording and the pre-recorded linguistic materials were cross-correlated to identify the time offset. Finally, for additional confirmation, the occurrence of each word token and its timing was validated manually. Together, these measures allowed for the millisecond-level alignment of neuronal activity with each word occurrence as they were heard by the participants during the tasks.
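The cross-correlation step used to find the time offset can be illustrated with a minimal sketch. It assumes that both signals are one-dimensional arrays sampled at the same rate; the function name `estimate_offset` and the toy signals are hypothetical and are not taken from the study's code.

```python
import numpy as np


def estimate_offset(session, reference):
    """Return the lag (in samples) at which `reference` best aligns with `session`.

    Assumes both inputs are 1-D arrays sampled at the same rate (illustrative only).
    """
    session = np.asarray(session, dtype=float)
    reference = np.asarray(reference, dtype=float)
    # Remove the mean so that a constant offset does not dominate the correlation.
    session = session - session.mean()
    reference = reference - reference.mean()
    xcorr = np.correlate(session, reference, mode="full")
    # In 'full' mode, index 0 corresponds to a lag of -(len(reference) - 1).
    return int(np.argmax(xcorr)) - (len(reference) - 1)


# Toy example: a noise burst embedded 30 samples into a longer recording.
rng = np.random.default_rng(0)
stimulus = rng.standard_normal(50)
recording = np.zeros(200)
recording[30:80] = stimulus
lag_in_samples = estimate_offset(recording, stimulus)
```

Dividing the returned lag by the sampling rate gives the offset in seconds. For long recordings an FFT-based correlation would be faster, but the direct form keeps the sketch short.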

Linguistic materials

Sentences

The participants were presented with eight-word-long sentences (for example, “The child bent down to smell the rose”; Extended Data Table 1) that provided a broad sample of semantically diverse words across a wide variety of thematic contents and contexts4. To confirm that the participants were paying attention, a brief prompt was used every 10–15 sentences asking them whether we could proceed with the next sentence (the participants generally responded within 1–2 seconds).

Homophone pairs

Homophone pairs were used to evaluate for meaning-specific changes in neural activity independently of phonetic content. All of the homophones came from sentence experiments in which homophones were available and in which the words within the homophone pairs came from different semantic domains. Homophones (for example, ‘sun’ and ‘son’; Extended Data Table 1), rather than homographs, were used as the word embeddings produce a unique vector for each unique token rather than for each token sense.

Word lists

A word-list control was used to evaluate the effect that sentence context had on neuronal response. These word lists (for example, “to pirate with in bike took is one”; Extended Data Table 1) contained the same words as those given during the presentation of sentences and were eight words long, but they were given in a random order, therefore removing any effect that linguistic context had on lexico-semantic processing.

Nonwords

A nonword control was used to evaluate the selectivity of neuronal responses to semantic (linguistically meaningful) versus non-semantic stimuli. Here the participants were given a set of nonwords such as ‘blicket’ or ‘florp’ (sets of eight) that sounded phonetically like words but held no meaning.

Story narratives

Excerpts from a story narrative were introduced at the end of recordings to evaluate for the consistency of neuronal response. Here, instead of the eight-word-long sentences, the participants were given a brief story about the life and history of Elvis Presley (for example, “At ten years old, I could not figure out what it was that this Elvis Presley guy had that the rest of us boys did not have”; Extended Data Table 1). This story was selected because it was naturalistic, contained new words, and was stylistically and thematically different from the preceding sentences.

Word embedding and clustering procedures

Spectral clustering of semantic vectors

To study the selectivity of neurons to words within specific semantic domains, all unique words heard by the participants were clustered into groups using a word embedding approach35,37,39,42. Here we used 300-dimensional vectors extracted from a pretrained dataset generated using a skip-gram Word2Vec11 algorithm on a corpus of 100 billion words. Each unique word from the sentences was then paired with its corresponding vector in a case-insensitive fashion using the Python Gensim library (version 3.4.0; Fig. 1c, left). High unigram frequency words (log probability of greater than 2.5), such as ‘a’, ‘an’ or ‘and’, that held little linguistic meaning were removed.

Next, to group words heard by the participants into representative semantic domains, we used a spherical clustering algorithm (v.0.1.7, Python 3.6) that used the cosine distance between their representative vectors. We then carried out a k-means clustering procedure in this new space to obtain distinct word clusters. This approach therefore grouped words on the basis of their vectoral distance, reflecting the semantic relatedness between words37,40, which has been shown to work well for obtaining consistent word clusters34,71. Using pseudorandom initiation cluster seeding, the k-means procedure was repeated 100 times to generate a distribution of values for the optimal number of clusters. For each iteration, a silhouette criterion for cluster numbers between 5 and 20 was calculated. The cluster number with the greatest average criterion value (which was also the most frequent value) was 9, which was taken as the optimal number of clusters for the linguistic materials used34,37,43,44.

Confirming the quality and separability of the semantic domains

Purity measures and d′ analysis were used to confirm the quality and separability of the semantic domains. To this end, we randomly sampled from 60% of the sentences across 100 iterations. We then grouped all words from these subsampled sentences into clusters using the same spherical clustering procedure described above. The new clusters were then matched to the original clusters by considering all possible matching arrangements and choosing the arrangement with greatest word overlap. Finally, the clustering quality was evaluated for ‘purity’, which is the proportion of the total number of words that were classified correctly72. This is therefore a simple and transparent measure that varies from 0 (bad clustering) to 1 (perfect clustering; Fig. 1d, bottom). The accuracy of this assignment is determined by counting the total number of correctly assigned words and dividing by the total number of words in the new clusters:

$$\text{purity}\left(\Omega ,{\mathbb{C}}\right)=\frac{1}{n}\mathop{\sum }\limits_{i=1}^{k}{\max }_{j}\left|{\omega }_{i}\cap {c}_{j}\right|$$

in which n is the total number of words in the new clusters, k is the number of clusters (that is, 9), \({\omega }_{i}\) is a cluster from the set of new clusters \(\Omega \), and \({c}_{j}\) is the original cluster (from the set of original clusters \({\mathbb{C}}\)) that has the maximum count for cluster \({\omega }_{i}\). Finally, to confirm the separability of the clusters, we used a standard d′ analysis. The d′ metric estimates the difference between vectoral cosine distances for all words assigned to a particular cluster compared to those assigned to all other clusters (Extended Data Fig. 2a).
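The purity formula above can be worked through with a short sketch. It assumes that every word carries one label from the new clustering and one from the original clustering; the function name and the toy labels are hypothetical.

```python
from collections import Counter


def purity(new_labels, original_labels):
    """Purity of a new clustering relative to the original clusters.

    For each new cluster, count the words shared with its best-overlapping
    original cluster, sum these counts and divide by the number of words.
    """
    if len(new_labels) != len(original_labels):
        raise ValueError("label sequences must have the same length")
    overlap = {}
    for new, orig in zip(new_labels, original_labels):
        overlap.setdefault(new, Counter())[orig] += 1
    matched = sum(max(counts.values()) for counts in overlap.values())
    return matched / len(new_labels)


# Toy example: six words, two new clusters, two original clusters.
new = [0, 0, 0, 1, 1, 1]
orig = ["food", "food", "animals", "animals", "animals", "animals"]
score = purity(new, orig)  # (2 + 3) / 6
```

The sketch follows the formula, in which each new cluster takes the maximum overlap over the original clusters. The one-to-one matching of clusters described in the text is not reproduced here.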

The resulting clusters were labelled here on the basis of the preponderance of words near the centroid of each cluster. Therefore, although not all words may seem to intuitively fit within each domain, the resulting semantic domains reflected the optimal vectoral clustering of words based on their semantic relatedness. To further allow for comparison, we also introduced refined semantic domains (Extended Data Table 2) in which the words provided within each cluster were additionally manually reassigned or removed by two independent study members on the basis of their subjective semantic relatedness. Thus, for example, under the semantic domain labelled ‘animals’, any word that did not refer to an animal was removed.

Neuronal analysis

Evaluating the responses of neurons to semantic domains

To evaluate the selectivity of neurons to words within the different semantic domains, we calculated their firing rates aligned to each word onset. To determine significance, we compared the activity of each neuron for words that belonged to a particular semantic domain (for example, ‘food’) to that for words from all other semantic domains (for example, all domains except for ‘food’). Using a two-sided rank-sum test, we then evaluated whether activity for words in that semantic domain was significantly different from activity in all other semantic domains, with the P value being false discovery rate-adjusted using a Benjamini–Hochberg method to account for repeated comparisons across all of the nine domains. Thus, for example, when stating that a neuron exhibited significant selectivity to the domain of ‘food’, this meant that it exhibited a significant difference in its activity for words within that domain when compared to all other words (that is, it responded selectively to words that described food items).
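The Benjamini–Hochberg adjustment across the nine domains can be sketched as follows. The sketch assumes that one raw P value per domain has already been obtained from a two-sided rank-sum test (for example, with `scipy.stats.ranksums`); the function name and the example P values are hypothetical.

```python
def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted P values in the original order."""
    m = len(p_values)
    # Indices of the P values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest P value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Toy example with four comparisons (the study used nine domains).
raw = [0.01, 0.04, 0.03, 0.005]
adj = benjamini_hochberg(raw)  # approximately [0.02, 0.04, 0.04, 0.02]
```

A neuron would then be considered selective for a domain when the adjusted P value for that domain falls below the chosen threshold.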

Next we determined the selectivity index (SI) of each neuron, which quantified the degree to which it responded to words within specific semantic domains compared to the others. Here SI was defined by the cell’s ability to differentiate words within a particular semantic domain (for example, ‘food’) compared to all others and reflected the degree of modulation. The SI for each neuron was calculated as

$${\rm{SI}}=\frac{\left|{{\rm{FR}}}_{{\rm{domain}}}-{{\rm{FR}}}_{{\rm{other}}}\right|}{\left|{{\rm{FR}}}_{{\rm{domain}}}+{{\rm{FR}}}_{{\rm{other}}}\right|}$$

in which \({{\rm{FR}}}_{{\rm{domain}}}\) is the neuron’s average firing rate in response to words within the considered domain and \({{\rm{FR}}}_{{\rm{other}}}\) is the average firing rate in response to words outside the considered domain. The SI therefore reflects the magnitude of effect based on the absolute difference in activity for each neuron’s preferred semantic domain compared to others. Therefore, the output of the function is bounded by 0 and 1. An SI of 0 would mean that there is no difference in activity across any of the semantic domains (that is, the neuron exhibits no selectivity) whereas an SI of 1.0 would indicate that a neuron changed its action potential activity only when hearing words within one of the semantic domains.
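As a worked example of the SI formula, the sketch below computes the index from two average firing rates. The function name and the example rates are hypothetical, and returning 0 when both rates are zero is an assumption made here to avoid division by zero.

```python
def selectivity_index(fr_domain, fr_other):
    """SI = |FR_domain - FR_other| / |FR_domain + FR_other|."""
    denominator = abs(fr_domain + fr_other)
    if denominator == 0:
        # Assumption: a silent neuron is treated as non-selective.
        return 0.0
    return abs(fr_domain - fr_other) / denominator


# A neuron firing at 6 spikes per second for 'food' words and 2 for all others.
si_example = selectivity_index(6.0, 2.0)  # |6 - 2| / |6 + 2| = 0.5
```

With non-negative firing rates the index stays between 0 (equal rates) and 1 (activity only for one of the two word sets).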

A bootstrap analysis was used to further confirm the reliability of each neuron’s SI across linguistic materials in two parts. For the first approach, the words were randomly split into 60:40% subsets (repeated 100 times) and the SI of semantically selective neurons was compared between the two subsets of words. For the second, instead of using the mean SI, we calculated the proportion of times that a neuron exhibited selectivity for a category other than its preferred domain when randomly selecting words from 60% of the sentences.

Confirming the consistency of neuronal response across analysis windows

The consistency of neuronal response across analysis windows was confirmed in two parts. The average time interval between the beginning of one word and the next was 341 ± 5 ms. For all primary analyses, neuronal responses were analysed in 400-ms windows, aligned to each word, with a 100-ms time-lag to further account for the evoked response delay of prefrontal neurons. To further confirm the consistency of semantic selectivity, we first examined neuronal responses using 350-ms and 450-ms time windows. Combining recordings across all 13 participants, a similar proportion of cells exhibiting selectivity was observed when varying the window size by ±50 ms (17% and 15%, χ2(1, 861) = 0.43, P = 0.81), suggesting that the precise window of analysis did not markedly affect these results. Second, we confirmed that possible overlap between words did not affect neuronal selectivity by repeating our analyses but now evaluating only non-neighbouring content words within each sentence. Thus, for example, for the sentence “The child bent down to smell the rose”, we would evaluate only non-neighbouring words (for example, child, down and so on) per sentence. Using this approach, we found that the SI for non-overlapping windows (that is, every other word) was not significantly different from the original SIs (0.41 ± 0.03 versus 0.38 ± 0.02, t = 0.73, P = 0.47), together confirming that potential overlap between words did not affect the observed selectivity.

Model decoding performance and the robustness of neuronal response

To evaluate the degree to which semantic domains could be predicted from neuronal activity on a per-word level, we randomly sampled words from 60% of the sentences and then used the remaining 40% for validation across 1,000 iterations. Only candidate neurons that exhibited significant semantic selectivity and for which sufficient words and sentences were recorded were used for decoding purposes (43 of 48 total selective neurons). For these, we concatenated all of the candidate neurons from all participants together with their firing rates as independent variables, and predicted the semantic domains of words (dependent variable). Support vector classifiers (SVCs) were then used to predict the semantic domains to which the validation words belonged. These SVCs were constructed to find the optimal hyperplanes that best separated the data by performing

$$\mathop{\min }\limits_{w,b,\zeta }\left(\frac{1}{2}{w}^{{\rm{T}}}w+C\mathop{\sum }\limits_{i=1}^{n}{\zeta }_{i}\right)$$

subject to

$${y}_{i}({w}^{{\rm{T}}}\varphi ({x}_{i})+b)\,\ge 1-{\zeta }_{i}$$

in which \(y\in {\left\{1,-1\right\}}^{n}\), corresponding to the classification of individual words, \(x\) is the neural activity, and \({\zeta }_{i}=\max \left(0,\,1-{y}_{i}\left({w}^{{\rm{T}}}\varphi ({x}_{i})+b\right)\right)\). The regularization parameter C was set to 1. We used a linear kernel and ‘balanced’ class weight to account for the inhomogeneous distribution of words across the different domains. Finally, after the SVCs were modelled on the bootstrapped training data, decoding accuracy for the models was determined by using words randomly sampled and bootstrapped from the validation data. We further generated a null distribution by calculating the accuracy of the classifier after randomly shuffling the cluster labels on 1,000 different permutations of the dataset. These models therefore together determine the most likely semantic domain from the combined activity patterns of all selective neurons. An empirical P value was then calculated as the percentage of permutations for which the decoding accuracy from the shuffled data was greater than the average score obtained using the original data. Statistical significance was determined at P < 0.05.

Quantifying the specificity of neuronal response

To quantify the specificity of neuronal response, we carried out two procedures. First, we reduced the number of words from each domain from 100% to 25% on the basis of their vectoral cosine distance from each of their respective domains’ centroid. Thus, for each domain, words that were closest to its centroid, and therefore most similar in meaning, were kept whereas words farther away were removed. The SIs of the neurons were then recalculated as before (Fig. 1h). Second, we repeated the decoding procedure but now varied the number of semantic domains from 2 to 20. Thus, a higher number of domains would mean fewer words per domain (that is, increased specificity of meaning relatedness) whereas a smaller number of domains would mean more words per domain. These decoders used 60% of words for model training and 40% for validation (200 iterations). Next, to evaluate the degree to which neuron and domain number led to improvement in decoding performance, models were trained for all combinations of domain numbers (2 to 20) and neuron numbers (1 to 133) using a nested loop. For control comparison, we repeated the decoding analysis but randomly shuffled the relation between neuronal response and each word as above. The percentage improvement in prediction accuracy (PA) for a given domain number (d) and number of neurons (n) was calculated as

$${\rm{improvement}}\left(d,\,n\right)=100\times \frac{\left[{{\rm{PA}}}_{{\rm{actual}}}\left(d,\,n\right)-{{\rm{PA}}}_{{\rm{shuffle}}}\left(d,\,n\right)\right]}{{{\rm{PA}}}_{{\rm{actual}}}\left(d,\,n\right)}$$

Evaluating the context dependency of neuronal response using homophone pairs

We compared the responses of neurons to homophone pairs to evaluate the context dependency of neuronal response and to further confirm the specificity of meaning representations. For example, if the neurons simply responded to differences in phonetic input rather than meaning, then we should expect to see smaller differences in firing rate between homophone pairs that sounded the same but differed in meaning (for example, ‘sun’ and ‘son’) compared to non-homophone pairs that sounded different but shared similar meaning (for example, ‘son’ and ‘sister’). Here, only homophones that belonged to different semantic domains were included for analysis. A permutation test was used to compare the distributions of the absolute difference in firing rates between homophone pairs (sample x) and non-homophone pairs (sample y) across semantically selective cells (P < 0.01). To carry out the permutation test, we first calculated the mean difference between the two distributions (sample x and y) as the test statistic. Then, we pooled all of the measurements from both samples into a single dataset and randomly divided it into two new samples x′ and y′ of the same size as the original samples. We repeated this process 10,000 times, each time computing the difference in the mean of x′ and y′ to create a distribution of possible differences under the null hypothesis. Finally, we computed the two-sided P value as the proportion of permutations for which the absolute difference was greater than or equal to the absolute value of the test statistic. A one-tailed t-test was used to further evaluate for differences in the distribution of firing rates for homophones versus non-homophone pairs (P < 0.001). Of the 133 neurons, 2 did not have homophone trials and were therefore excluded from analysis. An additional 16 neurons were also excluded for lack of response and/or for lying outside (>2.5 times) the interquartile range.
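The permutation procedure described above can be sketched as follows. The function name, the fixed random seed and the toy samples are assumptions introduced for illustration; the study's own implementation may differ in detail.

```python
import random


def permutation_test(x, y, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in means of x and y."""
    rng = random.Random(seed)

    def mean(values):
        return sum(values) / len(values)

    observed = mean(x) - mean(y)
    pooled = list(x) + list(y)
    n_x = len(x)
    hits = 0
    for _ in range(n_permutations):
        # Randomly divide the pooled data into x' and y' of the original sizes.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_x]) - mean(pooled[n_x:])
        if abs(diff) >= abs(observed):
            hits += 1
    return hits / n_permutations


# Toy example: clearly separated samples give a small P value.
homophone_diffs = [10.0, 11.0, 12.0, 13.0, 14.0]
non_homophone_diffs = [0.0, 1.0, 2.0, 3.0, 4.0]
p_value = permutation_test(homophone_diffs, non_homophone_diffs, n_permutations=2000)
```

Because the null distribution is built by random shuffling, the P value varies slightly with the seed and the number of permutations.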

Evaluating the context dependency of neuronal response using surprisal analysis

Information theoretic metrics such as ‘surprisal’ define the degree to which a word can be predicted on the basis of its antecedent sentence context. To examine how the preceding context of each word modulated neuronal response on a per-word level, we quantified the surprisal of each word as follows:

$${\rm{surprisal}}\left({w}_{i}\right)=-\log P({w}_{i}{\rm{| }}{w}_{1}\ldots {w}_{i-1})$$

in which P represents the probability of the current word (w) at position i within a sentence. Here, a pretrained long short-term memory recurrent neural network was used to estimate \(P({w}_{i}{\rm{| }}{w}_{1}\ldots {w}_{i-1})\)73. Words that are more predictable on the basis of their preceding context would therefore have a low surprisal whereas words that are poorly predictable would have a high surprisal.
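As a worked example of the surprisal definition, the sketch below converts conditional word probabilities into surprisal values. The probabilities are made-up numbers standing in for the output of a language model, and the use of the natural logarithm is an assumption because the base is not stated above.

```python
import math


def surprisal(probability):
    """Surprisal of a word given its conditional probability (natural log assumed)."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must lie in (0, 1]")
    return -math.log(probability)


# Hypothetical conditional probabilities for three words in a sentence.
word_probabilities = {"the": 0.5, "child": 0.05, "rose": 0.001}
word_surprisal = {word: surprisal(p) for word, p in word_probabilities.items()}
```

A highly predictable word (probability close to 1) has a surprisal close to 0, whereas an unlikely continuation has a large surprisal.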

Next we examined how surprisal affected the ability of the neurons to accurately predict the correct semantic domains on a per-word level. To this end, we used SVC models similar to that described above, but now divided decoding performances between words that exhibited high versus low surprisal. Therefore, if the meaning representations of words were indeed modulated by sentence context, words that are more predictable on the basis of their preceding context should exhibit a higher decoding performance (that is, we should be able to predict their correct meaning more accurately from neuronal response).

Determining the relation between the word embedding space and neural response

To evaluate the organization of semantic representations within the neural population, we regressed the activity of each neuron onto the 300-dimensional embedded vectors. The normalized firing rate of each neuron was modelled as a linear combination of word embedding elements such that

$${F}_{i,w}={v}_{w}{\theta }_{i}+{\varepsilon }_{i}$$

in which \({F}_{i,w}\) is the firing rate of the ith neuron aligned to the onset of each word w, \({\theta }_{i}\) is a column vector of optimized linear regression coefficients, \({v}_{w}\) is the 300-dimensional word embedding row vector associated with word w, and \({\varepsilon }_{i}\) is the residual for the model. On a per-neuron basis, \({\theta }_{i}\) was estimated using regularized linear regression that was trained using least-squares error calculation with a ridge penalization parameter λ = 0.0001. The model values, \({\theta }_{i}\), of each neuron (dimension = 1 × 300) were then concatenated (dimension = 133 × 300) to define a putative neuronal–semantic space θ. Together, these can therefore be interpreted as the contribution of a particular dimension in the embedding space to the activity of a given neuron, such that the resulting transformation matrix reflects the semantic space represented by the neuronal population.
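The per-neuron regression can be sketched with the closed-form ridge solution. The function name, the absence of an intercept term and the synthetic data are assumptions introduced for illustration; only the penalization parameter λ = 0.0001 comes from the text.

```python
import numpy as np


def fit_ridge(embeddings, firing_rates, lam=1e-4):
    """Solve (V^T V + lam * I) theta = V^T F for a single neuron.

    embeddings: array of shape (n_words, d); firing_rates: array of shape (n_words,).
    Assumes no intercept term (illustrative simplification).
    """
    d = embeddings.shape[1]
    gram = embeddings.T @ embeddings + lam * np.eye(d)
    return np.linalg.solve(gram, embeddings.T @ firing_rates)


# Synthetic example with 5-dimensional 'embeddings' instead of 300.
rng = np.random.default_rng(0)
V = rng.standard_normal((200, 5))
true_theta = np.array([1.0, -2.0, 0.5, 0.0, 3.0])
F = V @ true_theta
theta_hat = fit_ridge(V, F)

# Stacking one coefficient vector per neuron gives the neurons-by-dimensions matrix.
theta_matrix = np.vstack([theta_hat, theta_hat])
```

With a penalty this small the solution is close to ordinary least squares; the penalty mainly keeps the system well conditioned when the number of words is not much larger than the embedding dimension.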

Finally, a principal component (PC) analysis was used to dimensionally reduce θ along the neuronal dimension. This resulted in an intermediately reduced space (\({\theta }_{{\rm{pca}}}\)) consisting of five PCs, each with dimension = 300, together accounting for approximately 46% of the explained variance (81% for the semantically selective neurons). As this procedure preserved the dimension with respect to the embedding length, the relative positions of words within this space could therefore be determined by projecting word embeddings along each of the PCs. Last, to quantify the degree to which the relation between word projections derived from this PC space (neuronal data) correlated with those derived from the word embedding space (English word corpus), we calculated their correlation across all word pairs. From a possible 258,121 word pairs (the availability of specific word pairs differed across participants), we compared the cosine distances between neuronal and word embedding projections.
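The reduction and projection steps can be sketched with a singular value decomposition. The sketch assumes that θ is stored as a neurons × dimensions array and is centred across neurons before decomposition; the function names and the random data are hypothetical, and the centring choice is an assumption that may differ from the original analysis.

```python
import numpy as np


def neuronal_pcs(theta, n_components=5):
    """Return the leading PCs (each of embedding length) and their explained variance."""
    centered = theta - theta.mean(axis=0, keepdims=True)
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    explained = singular_values**2 / np.sum(singular_values**2)
    return vt[:n_components], explained[:n_components]


def project_words(embeddings, pcs):
    """Project word embeddings (n_words, d) onto the PCs (n_components, d)."""
    return embeddings @ pcs.T


def cosine_distance(a, b):
    """Cosine distance between two vectors."""
    return 1.0 - float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Random stand-ins: 20 'neurons', 8-dimensional 'embeddings', 4 'words'.
rng = np.random.default_rng(0)
theta = rng.standard_normal((20, 8))
words = rng.standard_normal((4, 8))
pcs, explained = neuronal_pcs(theta, n_components=3)
projections = project_words(words, pcs)
distance_01 = cosine_distance(projections[0], projections[1])
```

Cosine distances computed between such projections can then be compared with the cosine distances between the same word pairs in the original embedding space.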

Estimating the hierarchical structure and relation between word projections

As word projections in our PC space were vectoral representations, we could also calculate their hierarchical relations. Here we carried out an agglomerative single-linkage (that is, nearest neighbour) hierarchical clustering procedure to construct a dendrogram that represented the semantic relationships between all word projections in our PC space. We also investigated the correlation between the cophenetic distance in the word embedding space and difference in neuronal activity across all word pairs. The cophenetic distance between a word pair is a measure of inter-cluster dissimilarity and is defined as the distance between the largest two clusters that contain the two words individually when they are merged into a single cluster that contains both49,50,51. Intuitively, the cophenetic distance between a word pair reflects the height of the dendrogram where the two branches that include these two words merge into a single branch. Therefore, to further evaluate whether and to what degree neuronal activity reflected the hierarchical semantic relationship between words, as observed in English, we also examined the cophenetic distances in the 300-dimension word embedding space. For each word pair, we calculated the difference in neuronal activity (that is, the absolute difference between average normalized firing rates for these words across the population) and then assessed how these differences correlated with the cophenetic distances between words derived from the word embedding space. These analyses were performed on the population of semantically selective neurons (n = 19). For further individual participant comparisons, the cophenetic distances were binned more finely and outliers were excluded to allow for comparison across participants.

t-stochastic neighbour embedding procedure

To visualize the organization of word projections obtained from the PC analysis at the level of the population (n = 133), we carried out a t-distributed stochastic neighbour embedding procedure that transformed each word projection into a new two-dimensional embedding space \({\theta }_{{\rm{tsne}}}\) (ref. 74). This transformation utilized cosine distances between word projections as derived from the neural data.

Non-embedding approach for quantifying the semantic relationship between words

To further validate our results using a non-embedding approach, we used WordNet similarity metrics75. Unlike embedding approaches, which are based on the modelling of vast language corpora, WordNet is a database of semantic relationships whereby words are organized into ‘synsets’ on the basis of similarities in their meaning (for example, ‘canine’ is a hypernym of ‘dog’ but ‘dog’ is also a coordinate term of ‘wolf’ and so on). Therefore, although synsets do not provide vectoral representations that can be used to evaluate neuronal response to specific semantic domains, they do provide a quantifiable measure of word similarity75 that can be regressed onto neuronal activity.

Confirming the robustness of neuronal response across participants

Finally, to ensure that our results were not driven by any particular participant(s), we carried out a leave-one-out cross-validation participant-dropping procedure. Here we repeated several of the analyses described above but now sequentially removed individual participants (that is, participants 1–10) across 1,000 iterations. Therefore, if any particular participant or group of participants disproportionally contributed to the results, their removal would significantly affect them (one-way analysis of variance, P < 0.05). A χ2 test (P < 0.05) was used to further evaluate for differences in the distribution of neurons across participants.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.